Title: Proof of the satisfiability conjecture for large k

Author and Year: Jian Ding, Allan Sly, and Nike Sun; 2022

Journal: Annals of Mathematics

Consider a graph, which is a set of vertices connected with edges. Your task is to assign two colors to the vertices of the graph, but under the constraint that if vertices share an edge, then they must be different colors. Can you solve this problem and satisfy the constraint? Now suppose that the edges of the graph are chosen randomly; for example, by flipping a coin for every two vertices to determine if there is an edge connecting them. What’s the chance that you can still find a coloring which satisfies the constraint?

Figure 1: The left graph has 4 vertices and can be colored using 2 colors so that new two vertices which share an edge have the same color. The right graph has an additional edge (an extra constraint), and there is no longer a valid coloring using only 2 colors.

The Problem

This is the type of problem studied by Jian Ding, Allan Sly, and Nike Sun in their recent paper in the Annals of Mathematics. Graph coloring is a special case of a Constraint Satisfaction Problem (CSP). Most generally, a CSP consists of a set of variables x1,..,.xn (which can take values from some fixed set) and a set of constraints a1,..,.am. The problem is to assign values to the variables such that the constraints are satisfied. If a solution exists, the problem is said to be satisfiable. In graph coloring, the variables represent the vertices of a graph and the possible values are from a fixed set of colors. For each edge connecting two vertices i j there is a constraint xi xj .

The paper focuses on a different type of CSP called a boolean Satisfiability (SAT) problem. Here the variables can only take two values: +1, -1 (often thought of as True or False). Each constraint is a subset of the variables, each multiplied by +1 or -1. The constraint is satisfied if at least one of the values in the set is equal to +1. For example, the constraint

a ={x1, -x2}

is satisfied if x1=1 or x2= -1.

For a small number of variables and possible values, we could check by hand if there is a solution. But what if there are hundreds of variables and constraints? How could we find a solution, or even determine if one exists? One option is to simply check every possible assignment of values to the different variables. Since each variable can take two values, if there are n variables then there are 2n possible solutions. This means that in the worst case we would have to check an exponential number of solutions (in complexity theory, the problem of finding a solution is called NP-complete).

A different way to understand CSPs is the idea: Sample a random CSP. What’s the chance a solution exists? If the probability is close to 1, then most CSPs have a solution; if the probability is close to 0, then most CSPs do not have a solution.

To be more specific about the type of CSPs to sample, the authors investigate the random k-SAT model. Here there are n variables which can equal either +1 or -1, and a random number of random constraints. The parameter k determines how many variables are included in each constraint. Another parameter controls the average number of constraints – any particular variable is expected to be included in constraints, so on average there is a total of n constraints.

The Conjecture

What is the probability that a sampled instance of the k-SAT model is satisfiable? It is especially interesting to study how this probability depends on the parameters of the model: k, , n. If n is large, and and k relatively small, then there are relatively few constraints (and each constraint involves only a few variables), so we would expect that most problems are satisfiable. If , k become larger, then there are more constraints, so we would expect the probability to decrease.

It turns out that the dependence on the parameters is quite drastic, and there is a sharp change in the behavior of the model. There is a special point *, called a critical value, which depends on k (but not on n), such that if <* then the probability of being satisfiable is close to 1, but if >* then the probability of being satisfiable is close to 0. This satisfiability threshold conjecture was proposed by observing numerical simulations of the model.

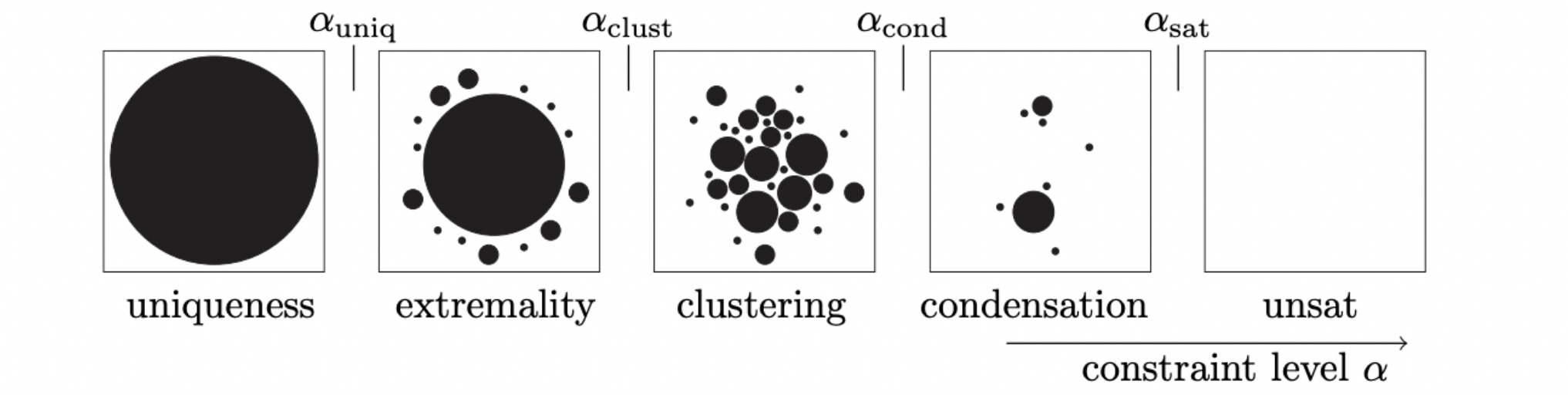

Figure 2: (Figure 1 from the paper) The dark blobs indicate the set of all possible k-SAT problems which have a solution. If is small most problems have a solution. As alpha increases (from left to right), the solution space has interesting behavior; eventually, if alpha > alpha*, then most problems do not have a solution.

The Results

The satisfiability threshold conjecture was previously proven in the case k = 2 and it has been a longstanding open-problem to show it for general k >2. In their 2022 paper, the authors Jian Ding, Allan Sly, and Nike Sun prove the conjecture holds for large k. Their novel techniques rely on viewing a random k-SAT problem as a random graph.

The perspective of the k-SAT problem as a graph allows the authors to utilize tools from other areas of math, and the results have interesting connections with computer science, statistical physics, and probability theory. They prove that the critical value exists as the root of some equation, but there is not an explicit formula. Ultimately, it is a complicated problem which helps answer the question: what’s the chance a random problem has a solution?