Article Title: The A.I. Doctor is In – Application of Large Language Models as Prediction Engines for Improving the Healthcare System

Authors & Year: L.Y. Jiang, X.C. Liu, N.P. Nejatian, M. Nasir-Moin, D. Wang, A. Abidin, K. Eaton, H.A. Riina, I. Laufer, P. Punjabi, M. Miceli, N.C. Kim, C. Orillac, Z. Schnurman, C. Livia, H. Weiss, D. Kurland, S. Neifert, Y. Dastagirzada, D. Kondziolka, A.T.M. Cheung, G. Yang, M. Cao, M. Flores, A.B. Costa, Y. Aphinyanaphongs, K. Cho, and E.K. Oermann (2023)

Journal: Nature [DOI:10.1038/s41586-023-06160-y]

Review Prepared by David Han

Predictive Healthcare Analytics

Physicians grapple with challenging healthcare decisions, navigating extensive information scattered across records such as patient histories and diagnostic reports. Current clinical predictive models often rely on structured inputs from electronic health records (EHR) or clinician entries, which complicates data processing and deployment. To overcome this challenge, a team of researchers at NYU developed NYUTron, a large language model (LLM)-based system that is now integrated into clinical workflows at the NYU Langone Health System. Trained on both structured and unstructured EHR text, NYUTron uses natural language processing (NLP) to read and interpret physicians’ notes and electronic orders. Within the NYU Langone Health System, NYUTron’s effectiveness was demonstrated on several clinical predictions:

- readmission, an episode in which a patient who had been discharged from a hospital is admitted again;
- in-hospital mortality, the death of a patient during the hospital stay;
- comorbidity, the simultaneous presence of two or more diseases or medical conditions in a patient.

It was also tested on operational tasks such as length of stay and insurance denial. By reframing medical predictive analytics as an NLP problem, the study shows that LLMs can serve as universal prediction engines for diverse medical tasks.

![Flow chart showing the steps of the LLM approach for clinical prediction. Panel a (top left): a Langone EHR box connected to two boxes, NYU Notes (clinical notes) and NYU Fine-Tuning (clinical notes and task-specific labels). Panel b (top right), pretraining: NYU Notes (clinical notes) connected to a language model box, which connects to a larger masked language model box below it showing the prompt fill in [mask]: a 39-year-old [mask] was brought in by ..., with the model replying patient. Panel c (bottom left), fine-tuning: NYU Fine-Tuning (clinical notes and task-specific labels) connected to a pretrained model box, which connects down to a larger fine-tuning box showing a predicted p(label) and ground-truth pair (0.6, 0.4); this connects to a small box labeled loss, then to a box labeled weight update that loops back to the pretrained model box. Panel d (bottom right), deployment: boxes for the fine-tuned model and the hospital EHR (clinical notes) connect to an inference engine, which connects down to an email alert (physician) box.](https://mathstatbites.org/wp-content/uploads/2024/05/msbHanNyu1-768x797.png)

Development Flow of NYUTron

Figure 1 describes the development of NYUTron in four main steps: data collection, pretraining, fine-tuning, and deployment.

First, a comprehensive set of unlabeled and labeled clinical notes was gathered from the NYU Langone EHR. The unlabeled set includes 7.25 million clinical notes from 387,144 patients across four hospitals. Together these form a 4.1-billion-word corpus collected between 2011 and 2020. The labeled fine-tuning sets consist of 1 to 10 years of inpatient clinical notes (55,791 to 413,845 patients, 51 to 87 million words) with task-specific labels.

Next, an LLM based on BERT (Bidirectional Encoder Representations from Transformers) was pretrained on the NYU Notes dataset with a masked language modeling (MLM) objective. Words in the clinical notes are masked, and the model is trained to fill in the masked words accurately. The pretrained model was then fine-tuned on task-specific datasets, learning to predict labels by building on the relationships learned during pretraining.

Finally, the optimized model was deployed to a high-performance inference engine. This engine interfaces with the NYU Langone EHR to provide real-time LLM-guided inference for the five tasks described above at the point of care.
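To make the MLM objective concrete, here is a minimal sketch of how training examples can be built from a note. It assumes the standard recipe from the original BERT paper: select 15% of tokens, then replace 80% of the selected ones with `[MASK]`, 10% with a random token, and leave 10% unchanged. The review does not give NYUTron’s exact masking settings. The sketch also splits on whitespace, whereas BERT uses subword (WordPiece) tokens. The function name `mask_tokens` and its parameters are illustrative, not taken from the study.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """Build one BERT-style MLM training example (illustrative sketch).

    Returns (masked, targets): targets[i] holds the original token at each
    position selected for prediction and None elsewhere, so the training
    loss is computed only on the selected positions.
    """
    rng = random.Random(seed)
    masked = list(tokens)
    targets = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:          # select this position for prediction
            targets[i] = tok
            r = rng.random()
            if r < 0.8:
                masked[i] = MASK              # 80% of selected: replace with [MASK]
            elif r < 0.9:
                masked[i] = rng.choice(vocab) # 10% of selected: random vocabulary token
            # remaining 10% of selected: keep the original token
    return masked, targets
```

For example, `mask_tokens("patient was discharged home in stable condition".split(), vocab=["patient", "home", "stable"], seed=42)` returns a partly masked copy of this hypothetical note plus the words the model must recover. The 10% random and 10% unchanged cases keep the model from relying on the literal `[MASK]` token, which never appears at inference time.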

Figure 1. Overview of the LLM-based approach for clinical prediction. a) The NYU Langone EHR was queried for two types of datasets. The pretraining dataset, NYU Notes, contains 10 years of inpatient clinical notes. There are five fine-tuning datasets, each containing 1 to 10 years of inpatient clinical notes with task-specific labels. b) A 109-million-parameter BERT-like LLM was pretrained on the entire EHR using an MLM task. c) The pretrained model was then fine-tuned on specific tasks and validated on held-out retrospective data. d) The fine-tuned model was compressed and loaded into an inference engine (i.e., the software component that runs the trained model on new inputs to produce predictions). The engine interfaces with the NYU Langone EHR to read discharge notes when treating physicians sign them. Adapted from Jiang et al. (2023).
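Panel c’s loop (predicted p(label), loss, weight update) can be sketched as one gradient-descent step for a binary classification head, with binary cross-entropy as the loss. This is a simplified stand-in for what the study did. It assumes the pretrained encoder has already turned a note into a fixed feature vector, and it updates only the head, whereas full fine-tuning also updates the encoder weights. The names `fine_tune_step` and `bce_loss`, the learning rate, and the example numbers are all hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def bce_loss(p, y):
    """Binary cross-entropy between predicted probability p and label y in {0, 1}."""
    eps = 1e-12
    p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def fine_tune_step(weights, bias, features, label, lr=0.1):
    """One gradient-descent step for a logistic classification head.

    `features` stands in for the pretrained model's embedding of a note
    (hypothetical); only the head's weights and bias are updated here.
    """
    z = sum(w * x for w, x in zip(weights, features)) + bias
    p = sigmoid(z)                 # predicted p(label = 1)
    loss = bce_loss(p, label)
    grad_z = p - label             # dLoss/dz for sigmoid + binary cross-entropy
    new_weights = [w - lr * grad_z * x for w, x in zip(weights, features)]
    new_bias = bias - lr * grad_z
    return new_weights, new_bias, p, loss
```

Starting from zero weights, the first call predicts p = 0.5 and incurs a loss of ln 2. Repeating the step on the same hypothetical example, say `features=[0.5, -1.2, 2.0]` with `label=1`, pushes p toward the label and lowers the loss. That is the feedback loop drawn in panel c.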

Overall Performance of NYUTron

NYUTron’s versatility was assessed through a retrospective evaluation across several tasks. It outperformed traditional models on predictions such as in-hospital mortality, readmission, length of stay, and insurance denial, with the area under the curve (AUC) ranging from 78% to 95%.

AUC measures how well a classification model performs. It is the area under the receiver operating characteristic (ROC) curve, which plots the true positive rate against the false positive rate as the decision threshold varies. An AUC of 50% corresponds to random guessing and 100% to perfect discrimination, so a higher AUC indicates better performance.

In predicting in-hospital mortality, NYUTron achieved a median AUC of 95%, about a 7.5% improvement over the baseline. In comorbidity index imputation, it achieved a median AUC of 90%. The model also extended to operational endpoints, predicting inpatient length of stay and insurance claim denial on admission, with consistent improvements over the baselines.

An in-depth analysis of NYUTron’s performance focused on predicting 30-day all-cause readmission. The task is to estimate, at the point of discharge, how likely a patient is to return to the hospital within 30 days. It is a well-explored problem in the medical informatics literature. On this task, NYUTron showed about a 5% improvement over the baseline.
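A useful way to read AUC is as the probability that a randomly chosen positive case (e.g., a patient who died in hospital) receives a higher score than a randomly chosen negative case, with ties counted as half. This is the Mann-Whitney interpretation, and it equals the area under the ROC curve. The minimal sketch below computes AUC that way. The function name and example data are illustrative. The pairwise loop is quadratic, which is fine for illustration; library routines such as scikit-learn’s `roc_auc_score` give the same value more efficiently.

```python
def roc_auc(labels, scores):
    """AUC as P(score of a random positive > score of a random negative).

    labels: 1 for positive cases, 0 for negative cases.
    Ties count as half a win. O(P * N) pairwise version for illustration.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```

With the hypothetical labels `[1, 0, 1, 0]` and scores `[0.9, 0.3, 0.4, 0.6]`, three of the four positive/negative pairs are ranked correctly, so `roc_auc` returns 0.75. A model that ranks every positive above every negative scores 1.0.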

Readmission Prediction by NYUTron

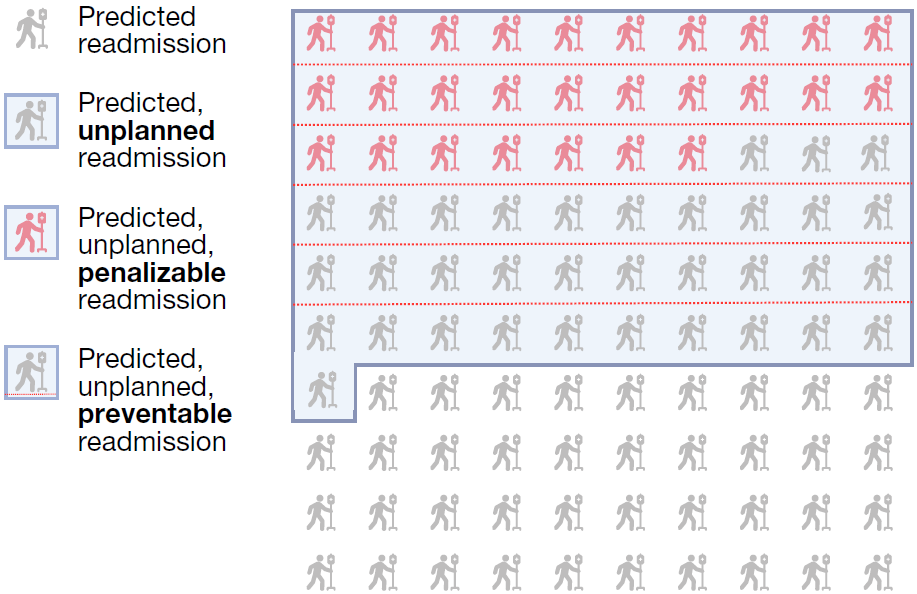

In predicting 30-day readmission, NYUTron outperformed six physicians. Compared with the median physician, it achieved a higher true positive rate (TPR, 82%) at the same false positive rate (FPR, 11%). With fine-tuning on the full dataset, NYUTron achieved the highest AUC, outperforming both models pretrained on non-clinical text and traditional models.

The study also highlighted NYUTron’s scalability: its AUC improved consistently as more labeled examples were added. Pretraining on a large set of unlabeled clinical notes enhanced its generalization capability, and local fine-tuning confirmed that it generalizes across different sites within the NYU Langone Health System. In summary, NYUTron showed superior performance, scalability, and generalizability, underscoring its potential for predictive healthcare applications.

Following these positive retrospective results, NYUTron’s real-world performance was evaluated in a prospective trial in 2022. Integrated into an EHR inference engine, it analyzed discharge notes for 29,000 encounters, of which 11% were readmitted within 30 days. NYUTron correctly flagged 82% of readmissions and achieved an AUC of 79%. In a qualitative evaluation, 6 physicians reviewed 100 randomly sampled readmission cases and found the predictions clinically meaningful, including cases that represented preventable readmissions; see Figure 2 for an illustration.

Predicted readmissions were associated with a 6-fold increase in in-hospital deaths and a 3-day longer stay. Among unplanned readmissions, 20% involved adverse events, of which 50% were deemed preventable. Financially, 82% of unplanned readmissions were subject to penalties under Centers for Medicare and Medicaid Services (CMS) guidelines, and 54% of these penalizable cases were considered preventable.
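The TPR and FPR used to compare NYUTron with physicians come from thresholding predicted probabilities. The two rates are defined as:

- TPR = TP / (TP + FN): the share of actual readmissions that are flagged;
- FPR = FP / (FP + TN): the share of non-readmitted patients who are wrongly flagged.

"Higher TPR at the same FPR" therefore means the model catches more readmissions while raising false alarms at the same rate. The sketch below computes these rates for a given threshold. It also includes a hypothetical `encounters_to_alert` helper that mimics the email-alert step of deployment. The review does not state the threshold NYUTron used, and all names and example data are illustrative.

```python
def confusion_rates(labels, scores, threshold):
    """Return (TPR, FPR, precision) when every score >= threshold is flagged.

    labels: 1 = readmitted within 30 days, 0 = not readmitted.
    """
    tp = fp = fn = tn = 0
    for y, s in zip(labels, scores):
        flagged = s >= threshold
        if flagged and y == 1:
            tp += 1  # readmission correctly flagged
        elif flagged:
            fp += 1  # alert raised for a patient who was not readmitted
        elif y == 1:
            fn += 1  # readmission missed
        else:
            tn += 1  # correctly left unflagged
    tpr = tp / (tp + fn) if tp + fn else 0.0  # a.k.a. recall or sensitivity
    fpr = fp / (fp + tn) if fp + tn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    return tpr, fpr, precision

def encounters_to_alert(encounter_ids, scores, threshold):
    """Hypothetical helper: encounter IDs whose score would trigger a physician alert."""
    return [e for e, s in zip(encounter_ids, scores) if s >= threshold]
```

For hypothetical labels `[1, 1, 0, 0, 0]` and scores `[0.9, 0.2, 0.7, 0.1, 0.3]` at threshold 0.5, one of two readmissions is caught (TPR 0.5) and one of three non-readmissions is flagged (FPR about 0.33). Raising the threshold lowers both rates, which is why comparisons are made at a matched FPR.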

Future & Challenges of Healthcare with A.I.

As NYUTron demonstrates, LLMs allow machines to understand and interpret the creative, individualized language used by physicians and other medical professionals, a significant advance in healthcare A.I. By automating tasks and providing real-time alerts to healthcare providers, NYUTron could improve workflow efficiency and free physicians to spend more time with their patients. Its success suggests that smart hospitals built on LLMs are feasible, with predictive models deployed swiftly across the healthcare system. Future studies could explore additional applications, such as extracting billing codes, predicting infection risk, and suggesting appropriate medications.

Importantly, NYUTron is positioned as a support tool for healthcare providers: its role is to aid decision-making, not to replace individualized provider judgment. Over-reliance on NYUTron’s predictions could raise ethical concerns, so further research is needed to optimize human-A.I. interaction, assess sources of bias, and address unexpected failures. In contrast to current trends favoring massive generative models, the study advocates a smaller encoder model pretrained on highly tailored data, emphasizing the importance of high-quality datasets for fine-tuning. Ongoing efforts also involve measuring the similarity between LLMs and physicians.

The long-term vision is A.I. assistants that help physicians improve care. Even so, continuous supervision remains essential to monitor the system’s behavior and its impact on patient health outcomes. The successful deployment of NYUTron for predicting 30-day readmission is a step toward integrating A.I. into healthcare systems. Ultimate validation, however, requires randomized controlled trials to assess its clinical impact and gather user feedback.